Rate limiting an API endpoint with Redis and the GCRA algorithm: a working example

Recently, I was working on a project that required rate limiting an endpoint. I found many articles online and ended up using two of the approaches they describe.

I explained one of them in this article.

For more theory, you can read this article that I found online.

Below is my working example. The first step is to install Redis. I use Rocky Linux 9 for this example.

1 Install Redis service

sudo yum install redis2 Enable, Start and check the status of Redis

sudo systemctl enable redis

sudo systemctl start redis

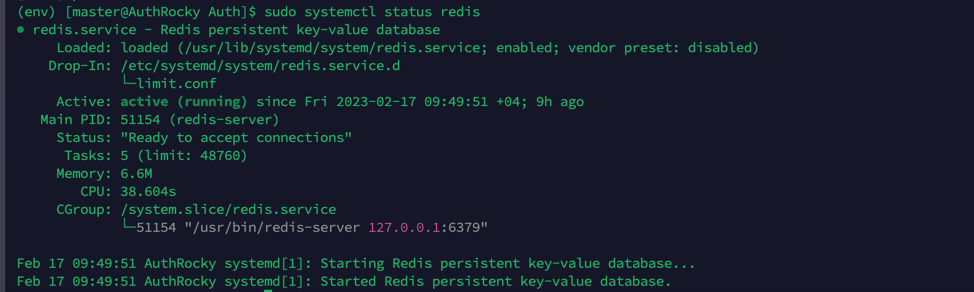

sudo systemctl status redisIt should look like this:

Next, we need to install the Python client for Redis.

3 Install the Redis Python client

    pip install redis

This time we are not tied to a framework, so you can use this guide with any framework you like.

4 Put the following code in your project

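    from datetime import timedelta

    from redis import Redis
    from redis.exceptions import LockError


    def request_is_limited(redis_conn: Redis, key: str, limit: int, period: timedelta) -> bool:
        period_in_seconds = int(period.total_seconds())
        # Use the Redis server clock (whole seconds) so every client agrees on "now".
        now = redis_conn.time()[0]
        # Spacing between requests at the sustained rate.
        separation = period_in_seconds / limit
        # Initialise the stored TAT if the key does not exist yet.
        redis_conn.setnx(key, 0)
        try:
            # Serialise the read-modify-write of the TAT across clients.
            with redis_conn.lock(f"lock:{key}", blocking_timeout=5):
                theoretical_arrival_time = max(float(redis_conn.get(key)), now)
                # Allow the request while the TAT is not too far ahead of now.
                if theoretical_arrival_time - now <= period_in_seconds - separation:
                    new_arrival_time = theoretical_arrival_time + separation
                    redis_conn.set(key, new_arrival_time)
                    return False
                return True
        except LockError:
            # The lock could not be acquired in time: treat the request as limited.
            return True

This is the main function that does the rate limiting. It uses GCRA (the generic cell rate algorithm), which is a special case of the leaky bucket algorithm. Instead of simulating a leak, it computes a "theoretical arrival time" (TAT) that the next request would have to meet. After each allowed request, the TAT is advanced by a fixed interval: the period divided by the limit (separation in the code).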

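If users make requests faster than the TAT allows, that is, if the TAT has moved more than one period minus one separation ahead of the current time, they are rate limited.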
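To see how the TAT evolves without a running Redis server, here is a minimal in-memory sketch of the same check. The `InMemoryGcra` class and its `allow` method are hypothetical names used only for illustration. The caller passes the current time explicitly, and `allow` returns True when the request passes (the opposite of `request_is_limited`).

```python
from datetime import timedelta


class InMemoryGcra:
    """Illustrative single-process GCRA limiter (hypothetical helper, not part of redis-py)."""

    def __init__(self, limit: int, period: timedelta) -> None:
        self.period = period.total_seconds()
        # Spacing between requests at the sustained rate.
        self.separation = self.period / limit
        # One theoretical arrival time (TAT) per key.
        self.tat = {}

    def allow(self, key: str, now: float) -> bool:
        """Return True if the request passes, using the same comparison as request_is_limited."""
        tat = max(self.tat.get(key, 0.0), now)
        if tat - now <= self.period - self.separation:
            self.tat[key] = tat + self.separation
            return True
        return False


limiter = InMemoryGcra(limit=30, period=timedelta(seconds=60))

# A burst at t=0: exactly `limit` requests pass and the rest are rejected.
burst = [limiter.allow("user", now=0.0) for _ in range(35)]
print(burst.count(True), burst.count(False))  # 30 5

# One separation interval (2 seconds) later, a single extra request fits again.
print(limiter.allow("user", now=2.0), limiter.allow("user", now=2.0))  # True False
```

The only state kept per key is one number, which is what the Redis version stores under the key as well.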

5 Use it in your project

In my case, I had too many auth attempts from the same user, so I used this function to rate limit the login endpoint.

    class TooManyRequestsExeption(Exception):
        name = "TooManyRequestsExeption"


    redis_connection = Redis(host="localhost", port=6379, db=0)

    # For example, inside the login handler, where attrs holds the submitted credentials:
    username = attrs["username"]
    password = attrs["password"]

    # Allow at most 30 attempts per username every 60 seconds.
    if request_is_limited(redis_connection, username, 30, timedelta(seconds=60)):
        raise TooManyRequestsExeption("Too many requests")

6 Test functionality

request_count = 100

url = "http://localhost:8001/auth/"

payload = json.dumps({"username": "123456789", "password": "123456"})

headers = {"Content-Type": "application/json"}

for _ in range(request_count):

response = requests.request("POST", url, headers=headers, data=payload)

if response.status_code == 200:

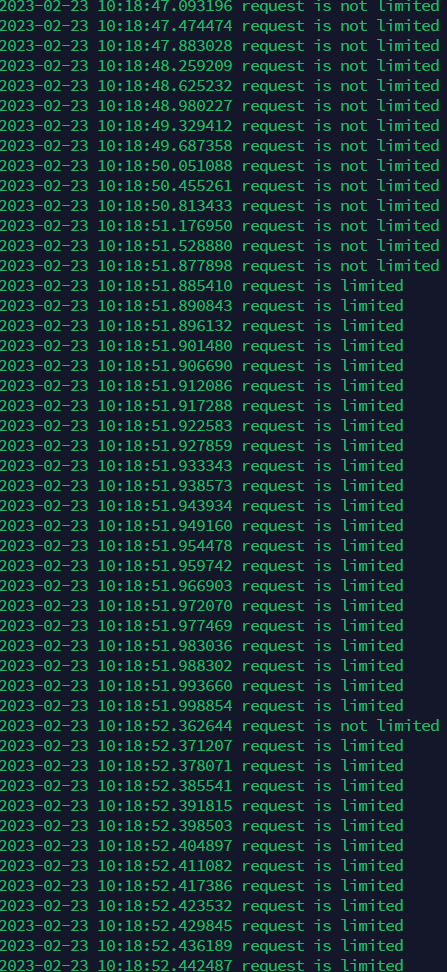

print(datetime.datetime.now(), "request is not limited")

else:

print(datetime.datetime.now(), "request is limited")when we reach the limit, we respond with a 429 status code:

As you can see, once we reach the limit, requests are denied. After that, each time the current time catches up with the TAT, one more request is allowed. With 30 requests per 60 seconds, that is one request every 2 seconds.
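If you want to tell a rejected client how long to wait, the delay can be derived from the stored TAT. The following is a minimal sketch and not part of the code above: `retry_after_seconds` is a hypothetical helper, and it assumes you have already read the key's current TAT and the current time (both in seconds).

```python
import math
from datetime import timedelta


def retry_after_seconds(tat: float, now: float, limit: int, period: timedelta) -> int:
    """Whole seconds until a request would pass the GCRA check (0 if it passes now).

    Hypothetical helper: `tat` is the stored theoretical arrival time for the key
    and `now` is the current time, both in seconds.
    """
    period_in_seconds = period.total_seconds()
    separation = period_in_seconds / limit
    # The check passes once tat - now <= period - separation.
    wait = max(tat, now) - now - (period_in_seconds - separation)
    return max(0, math.ceil(wait))


# With 30 requests per 60 seconds, a full burst at t=0 pushes the TAT to 60.
print(retry_after_seconds(tat=60.0, now=0.0, limit=30, period=timedelta(seconds=60)))  # 2
print(retry_after_seconds(tat=60.0, now=2.0, limit=30, period=timedelta(seconds=60)))  # 0
```

The result could, for instance, be returned in a `Retry-After` header alongside the 429 response.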

7 Conclusion

This method is very memory efficient: for each rate-limited key it stores only a single value, the TAT, plus a temporary lock key while a request is being checked.

I hope this article was helpful to you.